Like other spatial tree data structures, this not-so-hidden gem is used to optimize spatial queries by quickly ruling out large portions of the search space. More importantly, the RhinoCommon implementation is flexible and super straightforward to use. Simply insert objects into the tree, search the tree with a callback method of your choice, and go spend the several hundred milliseconds you just saved with your loved ones. Here's an example in Grasshopper where it's used to speed up the removal of duplicate points. If you turn on the profiler widget, you'll see how it stacks up against a brute-force approach.

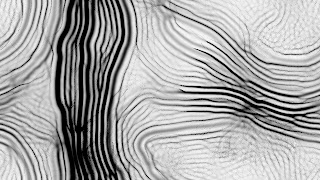
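
For anyone who wants to poke at the insert-then-search-with-a-callback pattern outside of Rhino, here's a minimal sketch in plain Python. To be clear about the assumptions: this is not the RhinoCommon API, and it swaps the R-tree for a much simpler uniform hash grid so the whole thing fits in one block. The names `GridIndex`, `insert`, `search`, and `cull_duplicates` are made up for illustration.

```python
import math
from collections import defaultdict


class GridIndex:
    """Uniform hash grid, used here as a simple stand-in for an R-tree."""

    def __init__(self, cell_size):
        self.cell_size = cell_size
        self.cells = defaultdict(list)  # cell key -> list of (item_id, point)

    def _key(self, p):
        # Integer cell coordinates of a 3D point.
        return tuple(math.floor(c / self.cell_size) for c in p)

    def insert(self, point, item_id):
        self.cells[self._key(point)].append((item_id, point))

    def search(self, center, radius, callback):
        """Call callback(item_id, point) for each stored point within radius."""
        lo = self._key(tuple(c - radius for c in center))
        hi = self._key(tuple(c + radius for c in center))
        # Only the cells overlapping the query box are visited;
        # everything else is ruled out without a distance check.
        for i in range(lo[0], hi[0] + 1):
            for j in range(lo[1], hi[1] + 1):
                for k in range(lo[2], hi[2] + 1):
                    for item_id, p in self.cells.get((i, j, k), ()):
                        if math.dist(center, p) <= radius:
                            callback(item_id, p)


def cull_duplicates(points, tolerance):
    """Keep the first point of every cluster closer than tolerance."""
    index = GridIndex(cell_size=tolerance)
    kept = []
    for p in points:
        hits = []
        # The callback just records which already-kept points are nearby.
        index.search(p, tolerance, lambda item_id, q: hits.append(item_id))
        if not hits:
            index.insert(p, len(kept))
            kept.append(p)
    return kept


points = [
    (0.0, 0.0, 0.0),
    (0.005, 0.0, 0.0),  # within tolerance of the first point
    (1.0, 1.0, 1.0),
    (1.0, 1.0, 1.002),  # within tolerance of the third point
    (2.0, 0.0, 0.0),
]
print(cull_duplicates(points, 0.01))
```

The brute-force version would compare every point against every kept point. Here each query only touches the handful of grid cells around it, which is the same "rule out most of the search space" idea in a cruder form.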

Anyways, this discovery led to several adaptive packing experiments shown below. Here the RTree class is being used to manage collisions among a dynamic population of spheres which are attracted to an input surface (NURBS in the first video, implicit in the second two). A new sphere is added to the population if the average pressure felt by the existing spheres falls below a given threshold. Similarly, if the average pressure exceeds a second, higher threshold, a sphere is removed. The RTree callback method handles both the calculation and application of repulsion forces and the recording of pressures across the system.
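
Here's a rough Python sketch of one iteration of that idea, with plenty of assumptions baked in. "Pressure" is taken to be the summed overlap depth each sphere feels, all spheres share one radius, the attraction to the surface is left out entirely, and the `search` function is a brute-force placeholder marking where the tree query would go. The names `search`, `relax_step`, `low`, and `high` are hypothetical, not from the original definition.

```python
import math


def search(centers, center, radius, callback):
    """Placeholder neighbor query: a brute-force scan standing in for the tree search."""
    for j, c in enumerate(centers):
        if math.dist(center, c) <= radius:
            callback(j)


def relax_step(centers, radius, low, high):
    """One iteration. Returns (moves, pressures, action)."""
    n = len(centers)
    moves = [[0.0, 0.0, 0.0] for _ in range(n)]
    pressures = [0.0] * n

    for i, ci in enumerate(centers):

        def on_hit(j, i=i, ci=ci):
            # Callback: accumulate repulsion and record pressure in one pass.
            if j == i:
                return
            d = math.dist(ci, centers[j])
            overlap = 2.0 * radius - d
            if overlap <= 0.0 or d == 0.0:
                return  # not touching, or coincident (no direction to push)
            for k in range(3):
                # Push sphere i away from sphere j by half the overlap.
                moves[i][k] += (ci[k] - centers[j][k]) / d * overlap * 0.5
            pressures[i] += overlap  # assumed pressure measure

        search(centers, ci, 2.0 * radius, on_hit)

    average = sum(pressures) / n if n else 0.0
    if average < low:
        action = "add"      # population is too relaxed, room for one more
    elif average > high:
        action = "remove"   # population is too crowded
    else:
        action = "keep"
    return moves, pressures, action


crowded = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
sparse = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
print(relax_step(crowded, 1.0, low=0.2, high=0.5)[2])
print(relax_step(sparse, 1.0, low=0.2, high=0.5)[2])
```

The gap between the two thresholds acts as a dead band, so the population isn't adding and removing a sphere on alternating iterations.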

In each case, the result is a near-uniform distribution of spheres across the given surface. While you could run the same process without a spatial tree, things could get out of hand pretty quickly, since the algorithm is essentially modifying its own input size each iteration. A brute-force collision check compares every sphere against every other, so its cost grows with the square of the population. The RTree ensures that the execution time scales more reasonably with the input size, preventing the whole thing from going up in smoke.

Platforms: Rhino, Grasshopper, C#